The era of “generative magic” is officially over. In 2023, the tech world lost its mind because a machine wrote a poem.

By 2026, that novelty has curdled into a ruthless demand for ROI. We are seeing a brutal split in the engineering workforce. On one side, you have “wrapper” developers still clinging to fragile API calls.

On the other side are the AI Systems Engineers. These professionals don’t guess at prompts; they program them. They don’t build straight lines; they architect cyclic graphs.

The Bottom Line

Stop writing prompts manually; compile them with DSPy. Abandon linear chains for state machines using LangGraph. Vector search fails on logic; mastery of GraphRAG is now mandatory. Security is a code-level dependency (CVE-2025-68664), and you must learn torch.compile to survive inference costs.

The “Real-World” Context: The 2026 Shift

The landscape hasn’t just shifted; it has bifurcated. We are seeing a hard divergence between “toy” demos and production systems.

Three critical updates are driving this change. First, the “Prompt Engineering” bubble has finally burst. With the release of DSPy 3.1.0 in January 2026, the industry acknowledged that manual tweaking is unscalable.

We now treat prompts as weights, optimizing them mathematically rather than linguistically.

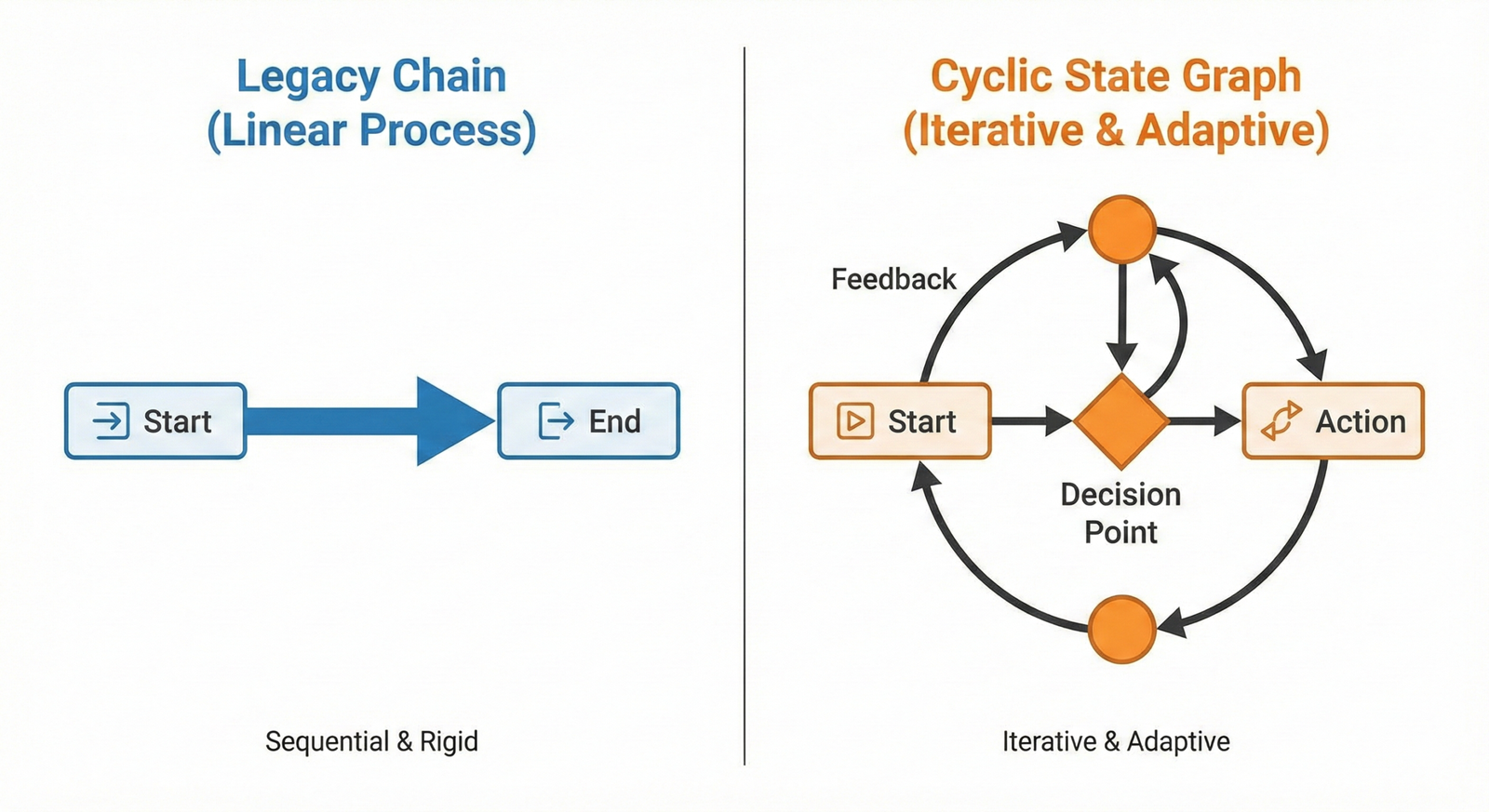

Second, static chains are dead. The release of LangGraph 1.0.6 enforces a move to cyclic, stateful architectures. Linear workflows simply cannot handle the retries required for agentic behavior.

Third, there is an uncomfortable truth about data.

Vector databases hit a hard limit in late 2025. Benchmarks from FalkorDB revealed that traditional Vector RAG scores 0% accuracy on schema-bound queries.

Skill 1: Programmatic Prompt Optimization (DSPy)

Feature vs. Benefit: No More “Vibe Checks”

For years, engineers relied on “vibe checks”—tweaking a prompt, running it once, and hoping for the best. This isn’t engineering; it is gambling.

DSPy (Declarative Self-improving Python) abstracts the prompt away entirely. It separates your logic from the textual representation.

Why it solves a problem: Changing a model usually breaks hand-crafted prompts. DSPy allows you to swap the model and “recompile” to regain performance.

It treats LLM calls as parameterized modules whose instructions and demonstrations can be optimized against a metric. No gradients are involved; the optimizer searches over text.

The “Hidden” Detail: MIPROv2 Integration

Most tutorials stop at simple selectors. But the real power in 2026 lies in MIPROv2.

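Unlike basic optimizers, MIPROv2 uses Bayesian Optimization. It explores the space of instructions and examples simultaneously, looking for the combination that scores highest on your metric. It does not guarantee a global maximum, but it searches far more of the space than manual tweaking can.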


Documentation often skips this step, but you must provide a distinct validation set. If you use your training data for validation, you will overfit the Bayesian search and degrade production performance.
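Here is a minimal sketch of that separation, assuming your labeled examples live in a plain Python list. The helper name `split_examples`, the 80/20 ratio, and the toy question/answer pairs are illustrative assumptions, not part of DSPy.

```python
import random

def split_examples(examples, val_fraction=0.2, seed=0):
    """Shuffle once, then cut into disjoint train and validation lists."""
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be between 0 and 1")
    shuffled = list(examples)               # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)   # seeded for reproducible splits
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]

# Hypothetical question/answer pairs standing in for real labeled data.
examples = [{"question": f"q{i}", "answer": f"a{i}"} for i in range(10)]
trainset, valset = split_examples(examples)

# The two sets must not share any example.
train_questions = {ex["question"] for ex in trainset}
val_questions = {ex["question"] for ex in valset}
assert train_questions.isdisjoint(val_questions)
```

In a DSPy project you would typically wrap each record as `dspy.Example(question=..., answer=...).with_inputs("question")` before handing the two lists to the optimizer.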

Code Analysis: Implementing MIPROv2

Below is a production-grade setup. Note the rigorous separation of training and validation sets.

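Python

    import dspy
    from dspy.teleprompt import MIPROv2
    from dspy.datasets.gsm8k import gsm8k_metric

    # 1. Configure the Language Model (LM)
    # Substitute a model name your provider actually serves.
    lm = dspy.LM('openai/gpt-4o-2025-11-20', api_key='YOUR_KEY')
    dspy.configure(lm=lm)

    # 2. Define the Signature
    class RAGSignature(dspy.Signature):
        """Answer questions based on retrieved context with precise reasoning."""

        context = dspy.InputField(desc="relevant facts from knowledge base")
        question = dspy.InputField()
        reasoning = dspy.OutputField(desc="step-by-step logical deduction")
        answer = dspy.OutputField(desc="short, fact-based answer")

    # Minimal illustrative module (an assumption; adapt it to your pipeline).
    class RAGModule(dspy.Module):
        def __init__(self):
            super().__init__()
            self.generate = dspy.Predict(RAGSignature)

        def forward(self, context, question):
            return self.generate(context=context, question=question)

    # 3. MIPROv2 Optimization
    # auto="medium" picks a mid-sized search budget (cost vs. depth).
    # gsm8k_metric is a stand-in; swap in a metric that fits your task.
    optimizer = MIPROv2(
        metric=gsm8k_metric,
        auto="medium",
        num_threads=8
    )

    # 4. Compile the Program
    # trainset and valset are disjoint lists of dspy.Example objects built elsewhere.
    # The compiler will mutate instructions and demonstrations.
    optimized_rag = optimizer.compile(
        RAGModule(),
        trainset=trainset,
        valset=valset,
        max_bootstrapped_demos=4,
        max_labeled_demos=4
    )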

Comparative Analysis: DSPy vs. LangChain (Legacy)

| Feature | LangChain (Legacy) | DSPy (2026 Standard) | Verdict |
| --- | --- | --- | --- |
| Prompting | Manual f-strings | Signatures (Typed I/O) | DSPy kills brittle strings. |
| Tuning | Manual trial & error | Compilers (MIPROv2) | DSPy automates tuning. |
| Model Swap | Rewrite prompts | Recompile | DSPy prevents lock-in. |

Skill 2: Agentic Orchestration (LangGraph)

Feature vs. Benefit: State is King

The defining feature of LangGraph is the StateGraph. Legacy chains were stateless; they processed input A to output B and immediately forgot everything.

Agents need structural state. They must track loop counters, decision flags, and conditional outputs across multiple turns.

Why it solves a problem: Real-world tasks involve cycles. You cannot reliably build a “Code -> Test -> Fix” loop with a directed acyclic graph.
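To make the cycle concrete, here is a library-free sketch of a “Code -> Test -> Fix” loop with explicit state. It is not LangGraph's API; the `AgentState` fields, the toy test, and the toy fix are all assumptions chosen so the example runs on its own.

```python
from dataclasses import dataclass, field

@dataclass
class AgentState:
    code: str
    attempts: int = 0                       # loop counter
    passed: bool = False                    # decision flag
    log: list = field(default_factory=list)

def run_tests(state):
    # Toy "test" node: the code passes once it contains the fix marker.
    state.passed = "fixed" in state.code
    state.log.append("test")
    return state

def fix_code(state):
    # Toy "fix" node: a real agent would call a model here.
    state.code += "  # fixed"
    state.attempts += 1
    state.log.append("fix")
    return state

def run_loop(state, max_attempts=3):
    # The edge from "fix" back to "test" is the cycle a DAG cannot express.
    state = run_tests(state)
    while not state.passed and state.attempts < max_attempts:
        state = fix_code(state)
        state = run_tests(state)
    return state

final = run_loop(AgentState(code="def add(a, b): return a - b"))
```

The `max_attempts` cap plays the same role as a recursion limit: the loop is allowed, but it cannot spin forever.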

The “Missing Link”: Time Travel

LangGraph introduces “checkpointing.” This allows you to persist the state of an agent at every step.

If an agent fails at Step 4, you don’t restart. You “time travel” back to Step 3, edit the state manually, and resume.
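The idea can be sketched in plain Python: snapshot the state after every step, then rewind, patch, and resume. This is a conceptual illustration under my own naming (`CheckpointedRun`, `rewind`), not LangGraph's checkpointer interface.

```python
import copy

class CheckpointedRun:
    """Stores a deep copy of the state after every step."""

    def __init__(self, initial_state):
        self.history = [copy.deepcopy(initial_state)]   # index 0 = before any step

    def step(self, fn):
        new_state = fn(copy.deepcopy(self.history[-1]))
        self.history.append(new_state)
        return new_state

    def rewind(self, step_index, patch=None):
        """Drop everything after step_index and optionally edit that state."""
        self.history = self.history[: step_index + 1]
        if patch:
            self.history[-1].update(patch)
        return self.history[-1]

def add_step(name):
    def fn(state):
        state["trace"].append(name)
        return state
    return fn

run = CheckpointedRun({"trace": [], "query": "revenue 2024"})
for name in ["plan", "search", "draft", "review"]:
    run.step(add_step(name))

# Step 4 ("review") went wrong: go back to Step 3, edit the state, resume.
run.rewind(3, patch={"query": "revenue FY2024"})
run.step(add_step("review"))
```

Because every snapshot is a deep copy, editing the state at Step 3 leaves the earlier checkpoints untouched.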

Common Pitfalls & Debugging

In my review of Stack Overflow threads, two errors dominate the 2026 landscape.

1. The GraphRecursionError Loop

Error: Recursion limit of 25 reached without hitting a stop condition.

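The Fix: This isn’t a bug; it’s a safety guardrail. First confirm that your graph has a reachable exit condition, because a higher limit only delays the error in a truly infinite loop. If the task genuinely needs more steps, explicitly set the recursion_limit in your runtime config.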

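Python

    # app is your compiled graph; inputs is its initial state.
    # Do NOT just run app.invoke(inputs)
    config = {"recursion_limit": 100}
    for output in app.stream(inputs, config=config):
        pass  # consume each step's output; replace with real handling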

2. The GraphLoadError in Deployment

Error: Failed to load graph… No module named ‘agent’

The Fix: Your directory structure is likely missing __init__.py files. The builder cannot resolve local imports unless folders are treated as packages.

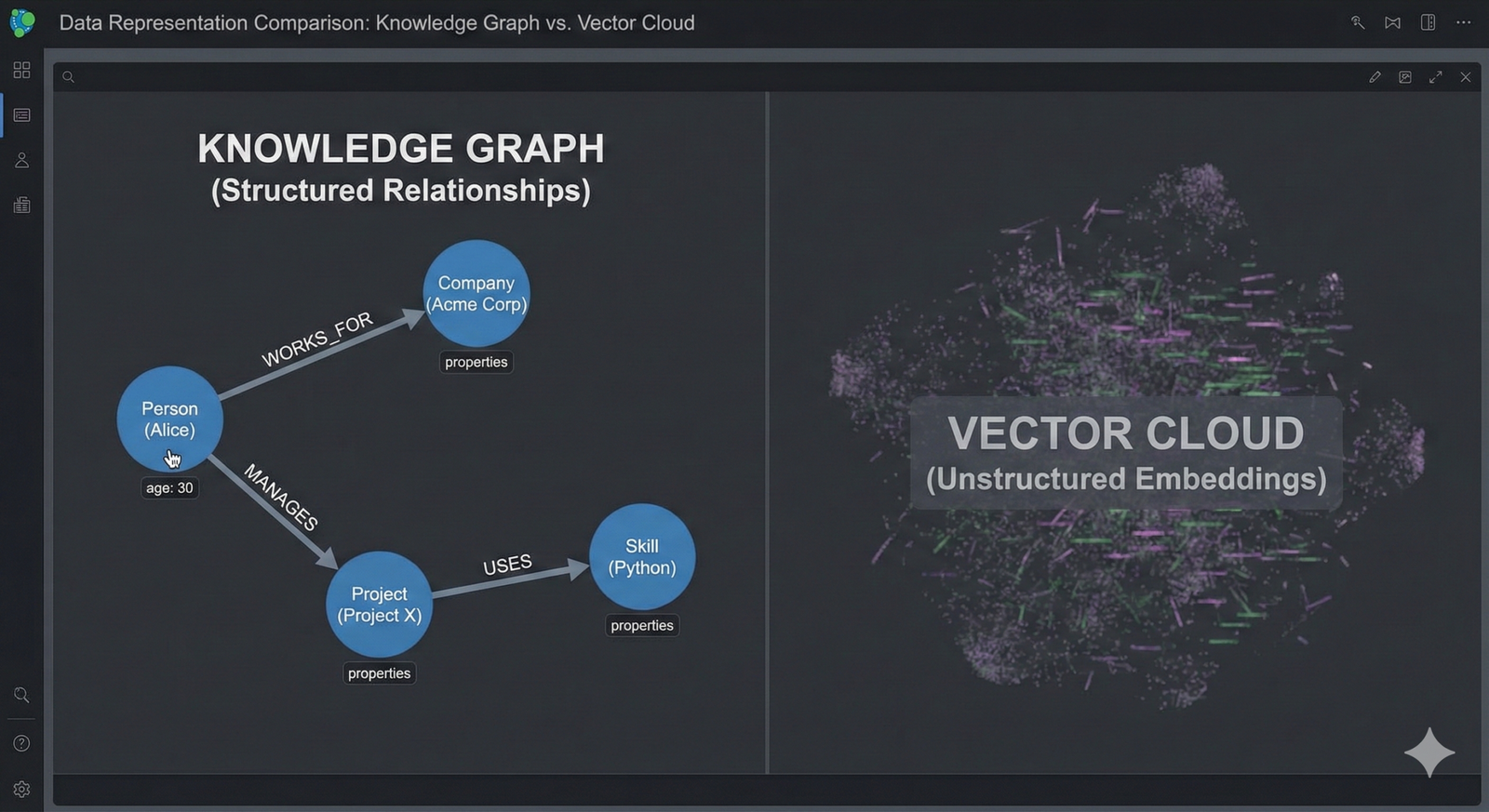

Skill 3: Structural Grounding (GraphRAG)

The Hard Truth: Vectors Are Not Enough

If you rely solely on Vector RAG, your system is blind to structure. Vector search works by semantic proximity.

It creates a “bag of facts” rather than a coherent answer.

Ask a vector system, “How does supply chain risk in Q3 compare to Q1?” It retrieves chunks for “Q3” and “Q1” but lacks the logic to compare them.

GraphRAG scores over 90% on these schema-bound queries because it traverses the relationship edges explicitly.
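A toy example shows what “traversing the edges” buys you. The triples, relation names, and severity numbers below are invented for illustration; a real system would query a graph database instead of a Python list.

```python
# Toy knowledge graph as (subject, relation, object) triples. All values are invented.
triples = [
    ("Q1", "HAS_RISK", "port_strike"),
    ("Q3", "HAS_RISK", "port_strike"),
    ("Q3", "HAS_RISK", "chip_shortage"),
    ("port_strike", "SEVERITY", 2),
    ("chip_shortage", "SEVERITY", 5),
]

def neighbors(node, relation):
    """Follow one edge type out of a node."""
    return [obj for subj, rel, obj in triples if subj == node and rel == relation]

def quarter_risk(quarter):
    # Two-hop traversal: quarter -> risks -> severity scores.
    return sum(neighbors(risk, "SEVERITY")[0] for risk in neighbors(quarter, "HAS_RISK"))

def compare_quarters(later, earlier):
    later_score, earlier_score = quarter_risk(later), quarter_risk(earlier)
    return {later: later_score, earlier: earlier_score, "delta": later_score - earlier_score}

comparison = compare_quarters("Q3", "Q1")
```

The comparison falls out of the structure itself: the answer is computed from explicit edges rather than inferred from two unrelated text chunks.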

The “Missing Link”: Hybrid Retrieval

Don’t choose between Vector and Graph. The winning pattern for 2026 is Hybrid Retrieval.

- Vector Search for unstructured breadth (“Find docs on pricing”).
- Graph Traversal for structured depth.

You must use Entity Linking as the bridge. Run Named Entity Recognition (NER) on vector results to map them to nodes in your graph.
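The bridge can be sketched end to end with stand-ins. Here keyword overlap replaces vector search and exact string matching replaces NER; both are simplifying assumptions, and the documents and graph are invented.

```python
import re

documents = {
    "doc1": "Acme Corp raised pricing for the Pro tier in March.",
    "doc2": "Pricing changes at Globex follow the Initech merger.",
    "doc3": "Office relocation schedule for the summer.",
}

# Toy graph keyed by entity name: entity -> relation -> targets.
graph = {
    "Acme Corp": {"SUPPLIES": ["Globex"]},
    "Globex": {"ACQUIRED": ["Initech"]},
    "Initech": {},
}

def tokenize(text):
    return set(re.findall(r"\w+", text.lower()))

def keyword_search(query, k=2):
    """Stand-in for vector search: rank documents by shared words."""
    q = tokenize(query)
    scored = [(len(q & tokenize(text)), doc_id) for doc_id, text in documents.items()]
    ranked = sorted(scored, key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in ranked[:k] if score > 0]

def link_entities(text):
    """Stand-in for NER: exact match against graph node names."""
    return [name for name in graph if name in text]

def hybrid_retrieve(query):
    hits = keyword_search(query)                                   # breadth
    linked = sorted({e for d in hits for e in link_entities(documents[d])})
    facts = [(e, rel, target)                                      # depth
             for e in linked
             for rel, targets in graph[e].items()
             for target in targets]
    return hits, linked, facts

hits, linked, facts = hybrid_retrieve("Find docs on pricing")
```

The text hits supply the wording; the linked entities supply relationships that no single chunk states outright.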


Skill 4: High-Fidelity Retrieval (ColBERTv2)

Feature vs. Benefit: Late Interaction

Standard “Dense Retrieval” compresses a document into a single vector. This is lossy compression for meaning.

ColBERTv2 keeps token-level embeddings for every document and compares them against the query’s token embeddings at search time. This is what “late interaction” means.

Why it solves a problem: It acts as the “4K resolution” version of search. In legal domains, dense vectors struggle to distinguish “not guilty” from “not guilty by reason of insanity.”

ColBERT catches these nuances because it matches “insanity” in the query directly to “insanity” in the document.
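The scoring rule behind late interaction is small enough to write out. This sketch uses hand-made one-hot vectors in place of real model embeddings, which is an assumption purely for illustration; only the MaxSim formula itself reflects how ColBERT-style scoring works.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def maxsim_score(query_vecs, doc_vecs):
    """Late interaction: every query token keeps only its best document match."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)

# Hand-made one-hot token "embeddings"; a real model would produce dense vectors.
EMBED = {
    "not": [1.0, 0.0, 0.0],
    "guilty": [0.0, 1.0, 0.0],
    "insanity": [0.0, 0.0, 1.0],
}

def embed(tokens):
    return [EMBED[token] for token in tokens]

query = embed(["not", "guilty", "insanity"])
doc_plain = embed(["not", "guilty"])
doc_insanity = embed(["not", "guilty", "insanity"])

score_plain = maxsim_score(query, doc_plain)
score_insanity = maxsim_score(query, doc_insanity)
```

The query token “insanity” finds no match in the plain document, so that document scores lower. Nothing was averaged away before the comparison happened.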

Implementation Context: RAGatouille

You won’t implement this from scratch. Use RAGatouille, a library that wraps ColBERT for easy integration.

Pro Tip: Use a “Retrieve-then-Rerank” pipeline. Use fast vectors to get the top 100 docs, then use ColBERT to rerank those candidates and keep the top 10.

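Python

    from ragatouille import RAGPretrainedModel

    # 1. Load Pretrained Model
    RAG = RAGPretrainedModel.from_pretrained("colbert-ir/colbertv2.0")

    # 2. Build an index first; search() needs one.
    # my_documents is a list of strings you supply; the index name is arbitrary.
    RAG.index(collection=my_documents, index_name="compliance_docs")

    # 3. Search with Token-Level Interaction
    results = RAG.search(query="Compliance requirements for Q3", k=5)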
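The two-stage shape of “Retrieve-then-Rerank” is independent of any library. In this sketch, word overlap stands in for the fast vector stage and bigram overlap stands in for the precise reranker; both scorers and the tiny corpus are assumptions for illustration.

```python
def words(text):
    return text.lower().split()

def overlap_score(query, doc):
    """Cheap first-stage score: number of shared words."""
    return len(set(words(query)) & set(words(doc)))

def bigram_score(query, doc):
    """Costlier second-stage score: number of shared adjacent word pairs."""
    def bigrams(text):
        w = words(text)
        return set(zip(w, w[1:]))
    return len(bigrams(query) & bigrams(doc))

def retrieve_then_rerank(query, corpus, n_candidates=100, k=10):
    # Stage 1: cast a wide net with the cheap scorer.
    candidates = sorted(corpus, key=lambda d: overlap_score(query, d), reverse=True)[:n_candidates]
    # Stage 2: spend the expensive scorer only on the survivors.
    return sorted(candidates, key=lambda d: bigram_score(query, d), reverse=True)[:k]

corpus = [
    "guilty plea entered",
    "not guilty verdict returned",
    "court adjourned until Monday",
    "not guilty by reason of insanity",
]
top = retrieve_then_rerank("not guilty by reason of insanity", corpus, n_candidates=3, k=2)
```

In a real pipeline the second stage would be the ColBERT model. RAGatouille exposes a rerank method for this purpose, but check its documentation for the current signature.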

Skill 5: AI Security & Serialization Defense

The “Real-World” Context: CVE-2025-68664

Security is no longer just about API keys. In late 2025, a critical vulnerability was found in LangChain Core.

Attackers can use Indirect Prompt Injection to insert a malicious lc key into your system.

When your application logs or caches this data, it deserializes the object. This can force your server to instantiate arbitrary classes or leak environment variables.
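As a defensive pre-check, you can scan untrusted JSON for the marker before it gets anywhere near a serializer. This is a sketch of my own, not the upstream patch; `find_lc_markers` is a hypothetical helper, and the payload is a made-up example shaped like a serialized secret reference.

```python
import json

def find_lc_markers(value, path="$"):
    """Return the JSON paths of every dict that carries an 'lc' key."""
    found = []
    if isinstance(value, dict):
        if "lc" in value:
            found.append(path)
        for key, child in value.items():
            found.extend(find_lc_markers(child, f"{path}.{key}"))
    elif isinstance(value, list):
        for index, child in enumerate(value):
            found.extend(find_lc_markers(child, f"{path}[{index}]"))
    return found

# Hypothetical attacker-influenced payload, e.g. model output stored as metadata.
untrusted = json.loads(
    '{"text": "hello", "metadata": {"lc": 1, "type": "secret", "id": ["OPENAI_API_KEY"]}}'
)
suspicious = find_lc_markers(untrusted)
```

Reject or strip anything this flags when the data came from a model or a user. Treat it as defense in depth; it does not replace the upgrade.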

The Mitigation Code

If your LangChain Core version is below 0.3.81, you are exposed.

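Python

    # MITIGATION CHECKLIST:
    # 1. Upgrade immediately (quote the requirement so the shell
    #    does not treat ">=" as a redirect):
    #    pip install "langchain-core>=0.3.81"

    from langchain_core.load import load

    # 2. Harden any explicit load() of untrusted data.
    # load() expects parsed JSON (a dict); use loads() for a raw string.
    # To my knowledge, valid_namespaces extends the default trusted
    # namespaces rather than narrowing them, so the upgrade is the real fix.
    data = load(
        user_input_json,
        secrets_from_env=False,  # never resolve secrets from the environment
        valid_namespaces=["langchain_core.messages"]
    )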

Common Pitfall: Developers think they are safe if they don’t call load() manually. False. Many internal caching layers use it automatically.

Skill 6: Hardware-Aware Performance

The “Hard Truth” of Inference

Python is too slow for 2030 scale. The interpreter overhead is a bottleneck.

torch.compile acts as a JIT (Just-In-Time) compiler. It turns PyTorch code into optimized kernels.

The Pain Point: In my testing, torch.compile is fragile on older GPUs like the RTX 3090.

If you are on 30-series hardware, force fp16 instead of fp8 (Ampere has no native fp8 support), or disable max-autotune. Otherwise, Triton optimizations can fail.
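One way to encode that rule is a small helper keyed on CUDA compute capability. The function name and the returned dictionary are my own assumptions, and the policy simply mirrors the advice above; the 8.9 threshold reflects that Ada and Hopper GPUs are the first with native fp8 support.

```python
def choose_compile_settings(compute_capability):
    """Pick conservative settings for GPUs without native fp8 support.

    compute_capability is a (major, minor) tuple, e.g. (8, 6) for an RTX 3090.
    """
    has_fp8 = tuple(compute_capability) >= (8, 9)   # Ada (8.9), Hopper (9.0), newer
    return {
        "precision": "fp8" if has_fp8 else "fp16",
        "mode": "max-autotune" if has_fp8 else "default",
    }

rtx_3090 = choose_compile_settings((8, 6))
rtx_4090 = choose_compile_settings((8, 9))
```

In a PyTorch script, `torch.cuda.get_device_capability()` returns such a tuple, and the chosen mode string can be passed along as `torch.compile(model, mode=settings["mode"])`.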

Mojo: The Wildcard

Mojo offers C++ speed with Python syntax.

Verdict: Don’t rewrite your whole app yet. Use Mojo for custom kernels where PyTorch lags. It is becoming the standard for the compute-heavy layer.

Final Thoughts

By 2030, “knowing AI” won’t mean chatting with a bot. It will mean architecting systems that can reason and recover.

You must move from guessing prompts to optimizing logic with DSPy. You must abandon linear thinking for cyclic state management. And you must defend your infrastructure against code-level injections.

The future belongs to the architects.

Next Step:

Run this command immediately. If the version is below 0.3.81, you are exposed to CVE-2025-68664: attacker-controlled data can trigger object instantiation or leak environment secrets.

Bash

pip show langchain-core
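If you would rather automate the check in CI, a short script can do the comparison. This sketch encodes only the 0.3.81 threshold stated in this article; check the official advisory for other release lines. The function names are hypothetical.

```python
from importlib import metadata

PATCHED = (0, 3, 81)

def parse_version(text):
    """Turn '0.3.80' into (0, 3, 80); non-numeric suffixes such as 'rc1' are ignored."""
    parts = []
    for piece in text.split(".")[:3]:
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits or 0))
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts)

def is_patched(version_text, minimum=PATCHED):
    return parse_version(version_text) >= minimum

def check_installed(package="langchain-core"):
    """Return (version, patched) or None when the package is not installed."""
    try:
        installed = metadata.version(package)
    except metadata.PackageNotFoundError:
        return None
    return installed, is_patched(installed)
```

Call `check_installed()` in a startup or CI step and fail the build when the second element is `False`.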

Key Takeaways

- DSPy replaces manual prompting with mathematical compilation.
- LangGraph is mandatory for building loops and agentic memory.
- GraphRAG solves the accuracy ceiling of vector databases.
- ColBERTv2 provides “4K resolution” search for complex queries.
- CVE-2025-68664 requires an immediate update to LangChain Core.
- torch.compile is a key lever for cutting inference costs, but it needs careful settings on consumer hardware.
