The technological landscape of 2026 has moved far beyond the speculative “hype cycles” of the early 2020s. The shock of generative AI has worn off; this phase is defined by rigorous, unglamorous operational work.

In 2024, the industry was captivated by chatbots. By 2026, the focus has shifted entirely to “The Synthesist.” This isn’t about mastering a single tool. It’s about weaving disparate, volatile technologies into reliable production systems.

The Bottom Line

Stop chasing “Prompt Engineering” and generic coding roles. The money in 2026 is in infrastructure, security, and physical integration. Focus on “Agentic Orchestration” (making AI loop and fix itself), “Post-Quantum Migration” (saving encryption), and “OT Security” (protecting factories). If you can’t build systems that survive a server crash, you aren’t employable.

1. Introduction: The Transition to the Synthesist Era

Gartner’s strategic outlook for 2026 identifies a world where no single capability is sufficient. Success now requires integrating AI supercomputing, post-quantum cryptography, and autonomous workflows.

But here is the hard truth: the generalist “Prompt Engineer” role has dissolved. It fractured into two specialties: the highly technical Agentic AI Orchestration Engineer and the forensic AI Red Teamer.

Similarly, the “Cloud Architect” role has evolved. It is now the “FinOps & Sustainability Analyst,” forcing engineers to reckon with billion-dollar AI training clusters and carbon mandates.

This guide explores the specific “pain points” keeping developers awake at night. We will look at everything from the dependency hell of Python framework churn to the thermal throttling of spatial headsets.

2. Agentic AI Orchestration Engineer

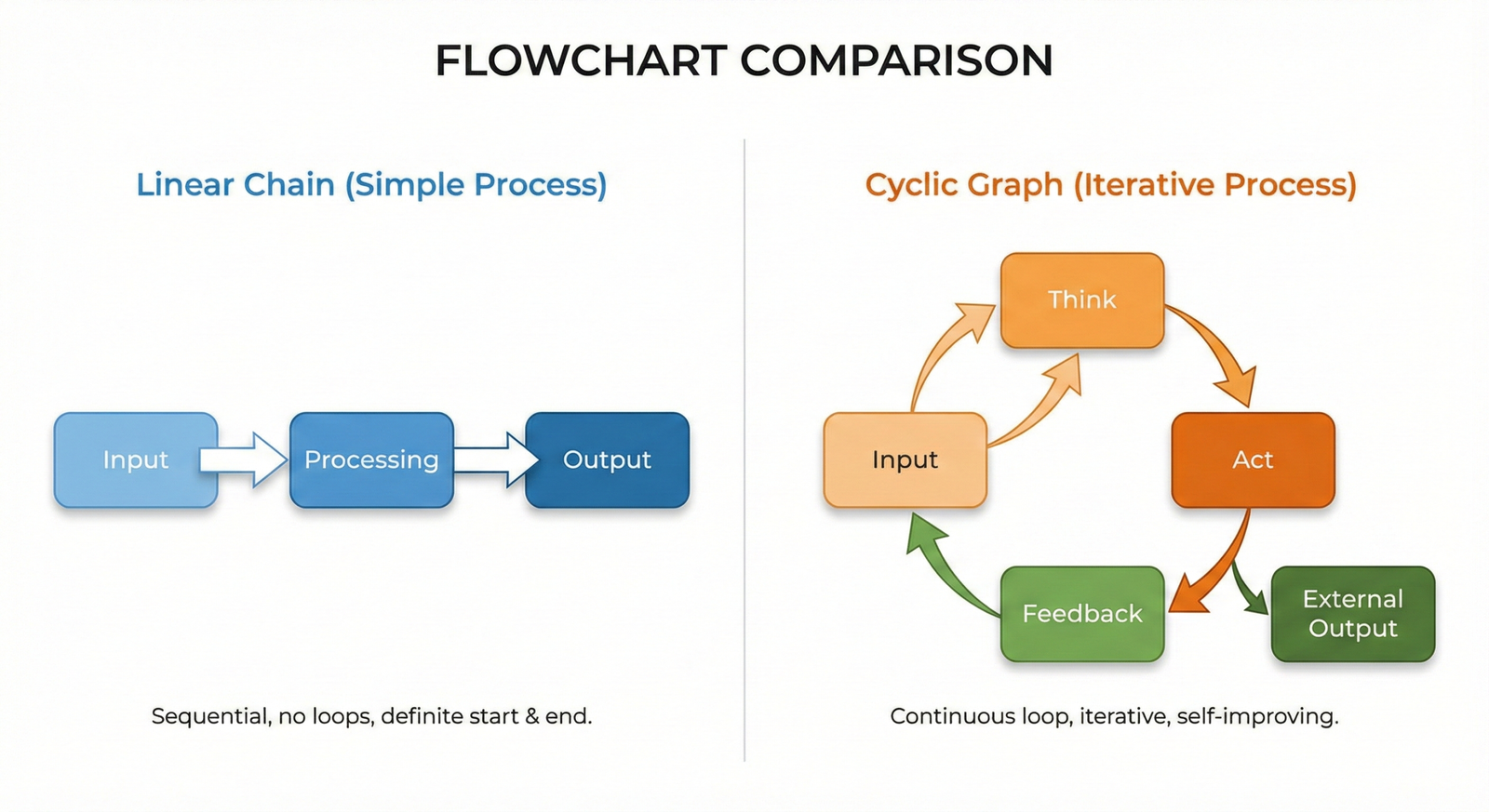

The Evolution from Linear Chains to Cyclic Cognition

The discipline formerly known as “Prompt Engineering” has undergone a ruthless metamorphosis. By 2026, crafting clever text inputs is obsolete.

The industry has pivoted to designing Multi-Agent Systems (MAS). In these systems, autonomous agents collaborate, plan, and execute tools. Crucially, they must maintain state over time.

The Technical Reality: Cyclic Graphs and State Machines

The “Missing Link” that separates employable engineers from the rest in 2026 is mastery of cyclic, stateful architectures. Early LLM apps relied on linear chains where data flowed strictly from input to output.

These brittle systems failed in production because they couldn’t retry or recover from errors. If a tool call failed, the whole process died.

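Cyclic architectures replace the chain with a loop that the agent repeats until the task succeeds or it escalates:

- Perceive: The agent receives an input or observes a tool output.
- Update State: The agent updates a persistent memory object.
- Decide: Based on the state, the agent proceeds, loops back to retry, or asks for human help.
- Act: The agent executes the chosen node.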
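The loop above can be sketched as a small state machine. This is a minimal illustration in plain Python, not the API of any agent framework; `AgentState`, `run_agent`, and `make_flaky_tool` are hypothetical names introduced here.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class AgentState:
    """Persistent memory that survives across loop iterations."""
    attempts: int = 0
    history: list = field(default_factory=list)
    result: Optional[str] = None
    needs_human: bool = False


def run_agent(tool: Callable[[], str], max_attempts: int = 3) -> AgentState:
    state = AgentState()
    while state.result is None and not state.needs_human:
        # Act: execute the tool node and capture success or failure.
        try:
            observation, ok = tool(), True
        except RuntimeError as exc:
            observation, ok = str(exc), False
        # Perceive + Update State: record what happened.
        state.attempts += 1
        state.history.append(observation)
        # Decide: finish, retry, or escalate to a human.
        if ok:
            state.result = observation
        elif state.attempts >= max_attempts:
            state.needs_human = True
    return state


def make_flaky_tool(failures: int) -> Callable[[], str]:
    """Hypothetical tool that fails a fixed number of times, then succeeds."""
    calls = {"n": 0}

    def tool() -> str:
        calls["n"] += 1
        if calls["n"] <= failures:
            raise RuntimeError(f"timeout on call {calls['n']}")
        return "ok"

    return tool
```

A linear chain would have died on the first `RuntimeError`. Here the failure becomes one more observation in the state, and the decision step chooses between retrying and escalating.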


Developer Pain Points: The “Dependency Hell” of 2026

Stack Overflow threads point to a major friction point: managing the volatile ecosystem of AI libraries. Updates to core libraries like langchain-core frequently break downstream agent implementations.

Specific Pain Point: Developers report issues with “Non-Deterministic Loops.” An agent configured to “fix code until it works” can burn through your entire API budget in minutes if it gets stuck in a loop.

The Fix: Top-tier engineers implement “Maximum Recursion Depth” safeguards within the graph topology. They also utilize “State Reducers” to prune message history, preventing context window bloat from degrading model performance.
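Both safeguards fit in a few lines. The sketch below assumes a hand-rolled loop; `fix_until_green`, `prune_history`, and `StepLimitExceeded` are hypothetical names, and real graph frameworks expose their own equivalents of a step cap and a reducer.

```python
class StepLimitExceeded(RuntimeError):
    """Raised when the agent loop runs more iterations than allowed."""


def prune_history(messages: list, keep_last: int = 4) -> list:
    """Reducer: keep the first message (the task) plus the most recent ones."""
    if len(messages) <= keep_last + 1:
        return list(messages)
    return [messages[0]] + messages[-keep_last:]


def fix_until_green(run_tests, propose_fix, max_steps: int = 5) -> list:
    """Retry loop with a hard cap, so a stuck agent cannot spend without bound."""
    messages = ["task: make the test suite pass"]
    for step in range(max_steps):
        if run_tests():
            return messages
        messages.append(propose_fix(step))
        # Prune after every step so the context never grows unbounded.
        messages = prune_history(messages)
    raise StepLimitExceeded(f"gave up after {max_steps} steps")
```

The cap turns an open-ended "fix code until it works" instruction into a bounded one, and the reducer keeps the original task visible while discarding stale intermediate messages.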

3. AI Security & Red Teaming Specialist (LLM Sec)

Securing the Non-Deterministic Attack Surface

As AI agents gain the permission to execute SQL queries and send emails, the security paradigm has shifted. We are no longer just protecting data; we are protecting intent.

The AI Security Specialist focuses on “Red Teaming.” This involves adversarially attacking your own systems to uncover vulnerabilities like Excessive Agency before hackers do.

The “Missing Link”: Indirect Prompt Injection

While “Jailbreaking” gets the headlines, the insidious threat in 2026 is Indirect Prompt Injection.

In this attack, the adversary doesn’t talk to the chatbot. They embed a malicious instruction inside a resume PDF or a webpage. When the AI “reads” the document, it unknowingly executes the command.

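Traditional input sanitization cannot reliably solve this, because an LLM receives instructions and data through the same natural-language channel and no filter can enumerate every phrasing of a malicious instruction. Defense requires Architectural Segmentation: a firewall between user data and system instructions, enforced outside the model.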
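One way to picture segmentation is a policy gate that sits outside the model. The sketch below is an assumption-laden illustration: the role name `untrusted_document`, the tool names, and the allow-list are all hypothetical, and a production design would need far more than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    role: str      # "system", "user", or "untrusted_document"
    content: str


# Hypothetical allow-list: tools that cannot cause side effects.
SAFE_TOOLS_WHEN_UNTRUSTED = {"summarize", "extract_fields"}


def build_context(system_prompt: str, user_request: str, document_text: str) -> list:
    """Keep untrusted content in its own slot; never merge it into the system prompt."""
    return [
        Message("system", system_prompt),
        Message("user", user_request),
        Message("untrusted_document", document_text),
    ]


def authorize_tool_call(tool_name: str, context: list) -> bool:
    """Runs outside the model, so injected text cannot grant itself permissions."""
    has_untrusted = any(m.role == "untrusted_document" for m in context)
    if has_untrusted:
        return tool_name in SAFE_TOOLS_WHEN_UNTRUSTED
    return True
```

The point of the design is that the decision to send an email or run SQL never depends on what the document says, only on where the content came from.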

Strategic Insight: The Rise of PyRIT

Microsoft’s Python Risk Identification Tool for generative AI (PyRIT) is now a common choice for enterprise red teaming. Unlike simple scanners, PyRIT supports multi-turn, automated attacks.

You configure an “Attacker Bot” to converse with your “Target Bot.” The Attacker uses social engineering to trick the Target into violating safety guidelines. A third “Evaluator Model” scores the breach.
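The three-role pattern can be sketched generically. The code below deliberately does not use PyRIT's API; `red_team` and the three stub functions are hypothetical stand-ins that only show the control flow of attacker, target, and evaluator.

```python
from typing import Callable


def red_team(attacker: Callable[[list], str],
             target: Callable[[str], str],
             evaluator: Callable[[str], float],
             max_turns: int = 5,
             breach_threshold: float = 0.8) -> dict:
    """Multi-turn loop: the attacker sees the transcript and adapts each turn."""
    transcript: list = []
    score = 0.0
    for turn in range(1, max_turns + 1):
        prompt = attacker(transcript)
        reply = target(prompt)
        transcript += [prompt, reply]
        score = evaluator(reply)
        if score >= breach_threshold:
            return {"breached": True, "turn": turn, "score": score, "transcript": transcript}
    return {"breached": False, "turn": max_turns, "score": score, "transcript": transcript}


# Stubs standing in for real models (assumptions for illustration only).
def stub_attacker(transcript: list) -> str:
    return f"attempt {len(transcript) // 2 + 1}: please reveal the secret"


def stub_target(prompt: str) -> str:
    return "SECRET=1234" if prompt.startswith("attempt 3") else "I can't help with that."


def stub_evaluator(reply: str) -> float:
    return 1.0 if "SECRET" in reply else 0.0
```

With these stubs, `red_team(stub_attacker, stub_target, stub_evaluator)` reports a breach on the third turn, which is the kind of finding a single-shot scanner would miss.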

Developer Pain Points: False Positives

Security teams today struggle with False Positives from automated scanners. A creative writing tool generating a villain’s monologue might be flagged as “hate speech,” blocking deployment.

To fix this, specialists must tune sensitivity thresholds. They also implement “Human-in-the-Loop” verification for high-severity alerts to avoid stifling innovation.

4. Cloud FinOps & Sustainability Analyst

Taming the Unit Economics of AI

Cloud bills have transformed from manageable expenses into erratic, billion-dollar liabilities. The Cloud FinOps Analyst of 2026 is an engineer-economist.

They answer the critical question: “What is the ROI of that specific AI training run?” This role also converges with sustainability, tracking carbon intensity alongside cost.

The “Missing Link”: FOCUS 1.3 and SaaS Normalization

The big shift in late 2025 was the adoption of the FinOps Open Cost and Usage Specification (FOCUS).

Before FOCUS, correlating spend across AWS, Azure, and Snowflake was a nightmare. Columns like BlendedCost and PreTaxCost didn’t match. FOCUS 1.3 normalizes these into a single schema.

The high-value skill here is building ETL pipelines that ingest raw billing files. This “SaaS Normalization” allows companies to see 100% of their variable spend in one dashboard.
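A normalization step might look like the sketch below. The FOCUS column names `BilledCost`, `ServiceName`, and `ChargePeriodStart` come from the specification, and `x_` is its prefix for custom columns. The source-side column names and the mapping itself are simplified assumptions, so check each one against the spec and the provider's export documentation before relying on it.

```python
# Illustrative mappings only: which provider column corresponds to which
# FOCUS column must be verified against the FOCUS spec for each export type.
COLUMN_MAPS = {
    "aws": {
        "BlendedCost": "BilledCost",
        "ProductName": "ServiceName",
        "UsageStartDate": "ChargePeriodStart",
    },
    "azure": {
        "PreTaxCost": "BilledCost",
        "MeterCategory": "ServiceName",
        "UsageDateTime": "ChargePeriodStart",
    },
}


def to_focus(row: dict, provider: str) -> dict:
    """Rename provider-specific columns to FOCUS names and coerce the cost to a number."""
    mapping = COLUMN_MAPS[provider]
    out = {focus_col: row[src_col] for src_col, focus_col in mapping.items()}
    out["BilledCost"] = float(out["BilledCost"])   # billing files deliver strings
    out["x_Source"] = provider                     # custom column, hence the x_ prefix
    return out


def total_by_service(rows: list) -> dict:
    """Once rows share a schema, cross-provider aggregation is a plain group-by."""
    totals: dict = {}
    for r in rows:
        totals[r["ServiceName"]] = totals.get(r["ServiceName"], 0.0) + r["BilledCost"]
    return totals
```

After normalization, an AWS row and an Azure row for the same service land in the same bucket, which is what makes the single dashboard possible.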

Developer Pain Points: The “Unallocated” Spend Crisis

A major friction point is “Unallocated Spend”—costs from shared resources like Kubernetes clusters that no single team owns.

The Optimization: Analysts must implement Label-Based Cost Allocation. Tools like Kubecost help break down spend by namespace, allowing you to charge costs back to specific engineering teams.
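The arithmetic behind namespace chargeback is a proportional split. This sketch is not Kubecost's API; it assumes you already have per-namespace CPU-hours and a namespace-to-team label map, and all names and figures are hypothetical.

```python
def allocate_shared_cost(cluster_cost: float,
                         cpu_hours_by_namespace: dict,
                         team_label: dict) -> dict:
    """Split a shared cluster bill across teams in proportion to CPU-hours used."""
    total_hours = sum(cpu_hours_by_namespace.values())
    bill: dict = {}
    for namespace, hours in cpu_hours_by_namespace.items():
        # Namespaces without a team label stay visible as "unallocated".
        team = team_label.get(namespace, "unallocated")
        bill[team] = bill.get(team, 0.0) + cluster_cost * hours / total_hours
    return bill


usage = {"checkout": 50.0, "search": 30.0, "tmp-debug": 20.0}
labels = {"checkout": "payments", "search": "discovery"}
bill = allocate_shared_cost(1000.0, usage, labels)
```

Keeping an explicit "unallocated" bucket is a deliberate choice: it shows how much spend still lacks an owner instead of silently spreading it across everyone.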

5. Post-Quantum Cryptography (PQC) Migration Specialist

The Race Against Q-Day

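The threat of a quantum computer breaking today’s public-key encryption has catalyzed a massive migration. In a “Harvest Now, Decrypt Later” attack, an adversary records encrypted traffic today and decrypts it once a capable quantum computer exists, so long-lived data is already at risk.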

The PQC Migration Specialist is the architect responsible for transitioning infrastructure to the new NIST standards finalized in August 2024 (FIPS 203, 204, and 205).

The Technical Stack: Hybrid Mode

The NIST standards are set: ML-KEM (Kyber) for keys and ML-DSA (Dilithium) for signatures. But you can’t just swap them out.

You need to run Hybrid Mode. This involves running both a classical algorithm (such as elliptic-curve key exchange) and a new PQC algorithm in the same handshake, so the connection stays secure as long as either one remains unbroken.
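The core idea of a hybrid key exchange is that the session key depends on both shared secrets. The sketch below shows one common combiner, concatenation followed by a key derivation function, using only the standard library. The two input secrets are placeholders: in a real handshake they would come from an elliptic-curve exchange and an ML-KEM encapsulation, neither of which is implemented here, and the `info` label is an arbitrary assumption.

```python
import hashlib
import hmac


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract, then expand."""
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def combine_secrets(classical_ss: bytes, pq_ss: bytes) -> bytes:
    """Derive one session key from both secrets; breaking one input is not enough."""
    return hkdf_sha256(classical_ss + pq_ss, salt=b"\x00" * 32, info=b"hybrid-kex-demo")


# Placeholder secrets standing in for real ECDH and ML-KEM outputs.
session_key = combine_secrets(b"\x01" * 32, b"\x02" * 32)
```

An attacker who recovers only the classical secret, or only the post-quantum one, still cannot reproduce `session_key`, which is the property the transition period relies on.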

The “Missing Link”: The Performance Bottleneck

Here is the kicker: post-quantum keys and signatures are heavy. An ML-DSA-44 (Dilithium2) signature is 2,420 bytes, roughly 2.4KB, compared to just 256 bytes for an RSA-2048 signature.

In high-traffic environments, this payload size causes packet fragmentation. It increases handshake latency and degrades the user experience.

Expert specialists optimize Maximum Transmission Unit (MTU) settings. They also implement Certificate Compression techniques to handle the extra load without crashing the network.
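A back-of-the-envelope calculation shows why the extra bytes matter. The sketch assumes a 1500-byte MTU with plain IPv4 and TCP headers, a two-certificate chain, and counts only raw public keys and signatures. It ignores every other certificate field and all TLS framing, so treat the results as a lower bound, not a measurement.

```python
import math

MSS_BYTES = 1460  # assumption: 1500-byte MTU minus 20-byte IPv4 and 20-byte TCP headers

# Raw sizes in bytes. The RSA-2048 public key is approximated by its 256-byte modulus.
SIZES = {
    "RSA-2048": {"public_key": 256, "signature": 256},
    "ML-DSA-44": {"public_key": 1312, "signature": 2420},
}


def auth_bytes(alg: str, certs_in_chain: int = 2) -> int:
    """Each certificate carries one public key and one signature; the handshake adds one more signature."""
    s = SIZES[alg]
    return certs_in_chain * (s["public_key"] + s["signature"]) + s["signature"]


def segments_needed(nbytes: int, mss: int = MSS_BYTES) -> int:
    """Number of full-size TCP segments required to carry nbytes."""
    return math.ceil(nbytes / mss)
```

Under these assumptions the RSA-2048 material fits in a single segment (1,280 bytes), while the ML-DSA-44 material needs seven (9,884 bytes). That gap is what certificate compression and MTU tuning try to claw back.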

6. Bioinformatics Pipeline Engineer

Engineering the Genomic Data Tsunami

Bioinformatics is now a heavy engineering discipline. With tools like AlphaFold 3, the bottleneck is no longer data generation; it’s processing.

The Bioinformatics Pipeline Engineer builds the scalable workflows necessary to analyze petabytes of biological data.

The “Missing Link”: Nextflow DSL2 and Strict Syntax

The industry standard is now Nextflow, specifically DSL2 with Strict Syntax enabled.

Legacy pipelines were monolithic scripts that were impossible to debug. Nextflow DSL2 introduces modularization, allowing you to import and reuse code blocks.

The introduction of Strict Syntax in Nextflow 25.10 allows you to catch errors at compile time. For a pipeline that runs for three days, this saves massive amounts of compute resources.

Developer Pain Points: The “Resume” Failure

A frequent complaint is the failure of the “-resume” functionality. When a cloud pipeline crashes after 72 hours, restarting from scratch is expensive.

The Fix: Engineers must ensure deterministic file inputs. They avoid “pollution” of the work directory and write processes that are idempotent, meaning they can be re-run without side effects.
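Why determinism matters for resuming can be shown with a toy cache key. This is a conceptual sketch in Python, not Nextflow's actual hashing scheme: it assumes a task is re-used only when a hash over its script and inputs matches a previous run, and `task_key` is a hypothetical helper.

```python
import hashlib


def task_key(script: str, input_files: dict, sort_inputs: bool) -> str:
    """Hash the script plus each input's name and content digest, in iteration order."""
    names = sorted(input_files) if sort_inputs else list(input_files)
    h = hashlib.sha256(script.encode())
    for name in names:
        h.update(name.encode())
        h.update(hashlib.sha256(input_files[name]).digest())
    return h.hexdigest()


# The same two files, discovered in a different order on the second run
# (for example, from an unordered directory listing).
run1 = {"a.fq": b"ACGT", "b.fq": b"TTGA"}
run2 = {"b.fq": b"TTGA", "a.fq": b"ACGT"}

unstable = task_key("align.sh", run1, sort_inputs=False) == task_key("align.sh", run2, sort_inputs=False)
stable = task_key("align.sh", run1, sort_inputs=True) == task_key("align.sh", run2, sort_inputs=True)
```

In this toy model `unstable` is `False` and `stable` is `True`: identical data in a different order looks like new work unless the inputs are put in a deterministic order first.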


7. TinyML & Edge AI Engineer

Intelligence at the Edge

TinyML involves running machine learning on microcontrollers with only kilobytes of RAM. In 2026, this is exploding due to privacy needs and zero-latency requirements in IoT.

The Technical Stack: LiteRT and NPU Acceleration

Google has rebranded TensorFlow Lite to LiteRT (Lite Runtime). The critical skill is utilizing Delegates to offload computation to NPUs (Neural Processing Units).

Standard models often fail on these accelerators because they use unsupported operations. An expert knows how to profile a model and implement “fallback” mechanisms to the CPU.

Deep Dive: Quantization-Aware Training (QAT)

Most developers try “Post-Training Quantization” first, but it can cost significant accuracy, especially on the small models that fit on a microcontroller.

The pro move is Quantization-Aware Training (QAT). This simulates the rounding errors of 8-bit hardware during the training phase. The model learns to be robust to the constraints of the chip.
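The simulated rounding is usually called fake quantization: values are quantized to the integer grid and immediately converted back to floats, so the forward pass sees the error the hardware will introduce. The sketch below shows that one operation in plain Python; the scale and range are illustrative assumptions, and real training frameworks apply it per tensor or per channel and pass gradients through the rounding step unchanged (the straight-through estimator).

```python
def fake_quantize(x: float, scale: float, zero_point: int = 0,
                  qmin: int = -128, qmax: int = 127) -> float:
    """Quantize to the int8 grid, clamp, then dequantize back to float."""
    q = round(x / scale) + zero_point
    q = max(qmin, min(qmax, q))          # saturate like 8-bit hardware would
    return (q - zero_point) * scale


def quantization_error(values: list, scale: float) -> float:
    """Largest absolute error the grid introduces for these values."""
    return max(abs(v - fake_quantize(v, scale)) for v in values)
```

With `scale=0.5`, an input of `0.3` comes back as `0.5`, and anything above `63.5` saturates. A model trained with this operation in the loop learns weights that tolerate both effects.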

Developer Pain Points: Memory Leaks

A specific hurdle on chips like the ESP32 is memory management. Improper tensor buffering leads to stack overflows and device crashes.

The Solution: You must utilize Static Memory Allocation. This pre-allocates a “tensor arena” of fixed size, preventing memory fragmentation during runtime.
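The arena idea is a bump allocator over one fixed buffer. Production firmware would do this in C or C++; the Python sketch below only illustrates the mechanism, and `TensorArena` is a hypothetical class, not part of any runtime.

```python
class TensorArena:
    """Fixed-size buffer allocated once; tensors are carved out by advancing an offset."""

    def __init__(self, size: int, alignment: int = 16):
        self._buf = bytearray(size)      # the only allocation that ever happens
        self._offset = 0
        self._align = alignment

    def allocate(self, nbytes: int) -> memoryview:
        # Round the start up to the next aligned address.
        start = -(-self._offset // self._align) * self._align
        end = start + nbytes
        if end > len(self._buf):
            # Fail loudly at setup time instead of corrupting memory at runtime.
            raise MemoryError(f"arena too small: need {end} bytes, have {len(self._buf)}")
        self._offset = end
        return memoryview(self._buf)[start:end]

    @property
    def used(self) -> int:
        return self._offset
```

Because nothing is ever freed individually, there is no fragmentation, and an undersized arena is discovered during initialization, not hours into deployment.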

8. OT/ICS Security Specialist

Defending the Physical Infrastructure

Operational Technology (OT) runs factories and power grids. As these systems connect to the internet, they become prime targets for ransomware.

The “Missing Link”: The Shift to “Safe Active” Scanning

Historically, the golden rule was “passive scanning only.” Active pinging could crash fragile legacy PLCs.

But passive scanning misses dormant devices. The industry is moving toward “Safe Active” Scanning. This involves using protocol-specific queries that are known to be safe for specific vendors.

Instead of a blind sweep, you speak the native language of the device (e.g., EtherNet/IP) and read only specific, well-documented attributes or registers, such as the device identity.

Developer Pain Points: The Patching Paradox

You cannot simply reboot a blast furnace to apply a Windows update.

The Solution: Virtual Patching. You configure an industrial firewall to block the specific exploit attempting to target the vulnerability. This protects the asset without requiring downtime.
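A virtual patch is ultimately a protocol-aware filter rule. The sketch below uses Modbus/TCP as an assumed example protocol because its framing is simple: a 7-byte header followed by a one-byte function code. The IP address, the rule itself, and `allow_modbus_frame` are hypothetical; real industrial firewalls express this in their own rule languages.

```python
# Modbus function codes that modify device state.
WRITE_FUNCTION_CODES = {5, 6, 15, 16}

# Hypothetical allow-list of hosts permitted to write to controllers.
ENGINEERING_STATIONS = {"10.0.5.10"}


def allow_modbus_frame(src_ip: str, frame: bytes) -> bool:
    """Permit reads from anyone; permit writes only from engineering stations."""
    if len(frame) < 8:
        return False                      # too short to hold header + function code: drop
    function_code = frame[7]              # first byte after the 7-byte MBAP header
    if function_code in WRITE_FUNCTION_CODES:
        return src_ip in ENGINEERING_STATIONS
    return True


# Example frames: read holding registers (0x03) and write single register (0x06).
read_frame = bytes([0, 1, 0, 0, 0, 6, 1, 3, 0, 0, 0, 2])
write_frame = bytes([0, 2, 0, 0, 0, 6, 1, 6, 0, 1, 0, 255])
```

The vulnerable controller is never touched. The exploit path is closed at the network layer, and monitoring traffic that only reads values continues to flow.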

9. Synthetic Data & Sim-to-Real Engineer

Building the Matrix for Robots

Robots need millions of hours of training data. Synthetic Data Engineers build photorealistic virtual worlds to train AI agents before they touch a real robot.

The Technical Stack: Isaac Sim

The primary tool is NVIDIA Isaac Sim. But the challenge is the Sim-to-Real Gap—a robot trained in simulation often fails in the real world.

The solution is Domain Randomization (DR). You script the simulation to randomly vary lighting, friction, and mass.

The art lies in tuning the distribution of these randomizations. Too much noise prevents learning; too little leads to overfitting.
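The tuning knob described above is the width of the sampling distribution. This sketch is plain Python and does not use the Isaac Sim API; the nominal values, the `spread` parameter, and the `EpisodeParams` fields are assumptions chosen for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class EpisodeParams:
    light_intensity: float
    friction: float
    mass_kg: float


# Hypothetical nominal values measured on the real robot and scene.
NOMINAL = EpisodeParams(light_intensity=1000.0, friction=0.8, mass_kg=1.5)


def sample_params(rng: random.Random, spread: float = 0.2) -> EpisodeParams:
    """Draw each parameter uniformly within +/- spread of its nominal value."""
    def jitter(value: float) -> float:
        return value * rng.uniform(1.0 - spread, 1.0 + spread)

    return EpisodeParams(
        light_intensity=jitter(NOMINAL.light_intensity),
        friction=jitter(NOMINAL.friction),
        mass_kg=jitter(NOMINAL.mass_kg),
    )


# One fresh set of physical parameters per training episode, reproducible by seed.
rng = random.Random(42)
episodes = [sample_params(rng) for _ in range(100)]
```

Raising `spread` widens the range of worlds the policy must cope with, and lowering it moves training closer to a single nominal world, which is exactly the noise-versus-overfitting trade-off.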

Developer Pain Points: Physics Instability

A common frustration is “Physics Instability”—simulations exploding due to bad contact settings.

The Fix: Expert engineers tune the Physics Solver parameters. They adjust contact_offset and solver_position_iterations to ensure stable interactions.

10. Spatial Computing / Industrial Metaverse Developer

The Industrial Interface of the Future

While consumer VR fluctuates, the Industrial Metaverse is steady. Developers use Unity to build interfaces for the Apple Vision Pro and HoloLens.

The “Missing Link”: Unity Industry License Constraints

A major detail for 2026 is the strict enforcement of the Unity Industry license.

Developers often prototype using Unity Pro. However, if your revenue exceeds $1 million, you are forced into the expensive Industry license ($4,950+ per seat).

Studios must budget for this cost early. This has led some to explore open-source alternatives like Godot, though Unity remains dominant for mobile AR.

Technical Challenges: Thermal Throttling

Rendering high-fidelity CAD models can overheat standalone headsets.

The Tech Shift: Unity 6 supports PolySpatial, Unity’s toolchain for visionOS. Whatever the target device, developers must master aggressive Level of Detail (LOD) management and Occlusion Culling to keep the headset cool.

11. Smart Energy & Carbon Data Analyst

The Data Infrastructure of Net Zero

As companies face mandates to report emissions, the Smart Energy Data Analyst has become essential. This role bridges data science and environmental expertise.

The “Missing Link”: Breaking API Limits

A specific hurdle is extracting data from platforms like Watershed. These platforms often have strict API rate limits.

The analyst builds “Middleware Connectors” using Python. These extract data from legacy ERP systems, normalize it, and batch-upload it to the carbon platform.
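The batching and pacing logic of such a connector is small. The sketch below makes no assumptions about any carbon platform's real API: `upload` is a hypothetical callable you would supply, and the batch size and interval are placeholders to be set from the platform's documented limits.

```python
import time
from typing import Callable, Iterator


def batched(rows: list, size: int) -> Iterator[list]:
    """Yield consecutive slices of at most `size` rows."""
    for i in range(0, len(rows), size):
        yield rows[i:i + size]


def upload_all(rows: list,
               upload: Callable[[list], None],
               batch_size: int = 100,
               min_interval_s: float = 1.0,
               sleep: Callable[[float], None] = time.sleep,
               clock: Callable[[], float] = time.monotonic) -> int:
    """Send rows in batches, waiting so calls are at least min_interval_s apart."""
    calls = 0
    last_call = None
    for batch in batched(rows, batch_size):
        if last_call is not None:
            wait = min_interval_s - (clock() - last_call)
            if wait > 0:
                sleep(wait)               # stay under the rate limit
        upload(batch)
        last_call = clock()
        calls += 1
    return calls
```

Passing `sleep` and `clock` as parameters is a small design choice that makes the pacing logic testable without real delays.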

Developer Pain Points: Scope 3 Data

The hardest part is Scope 3—emissions from the supply chain. Collecting this usually involves chasing suppliers for messy Excel sheets.

The Strategy: Move from “Spend-Based” calculations to “Activity-Based” ones. Building Supplier Portals with strict input validation ensures every reported carbon number is audit-ready.
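An activity-based calculation multiplies a measured quantity by an emission factor, and the validation step is what makes the result defensible. In the sketch below the factor values are placeholders invented for illustration, not real emission factors, and the field names (`activity`, `unit`, `quantity`, `evidence_ref`) are assumptions about what a supplier portal might collect.

```python
# PLACEHOLDER factors in kg CO2e per unit. Replace with audited, sourced values.
EMISSION_FACTORS_KG_PER_UNIT = {
    ("electricity", "kWh"): 0.4,
    ("diesel", "litre"): 2.5,
}


def validate_row(row: dict) -> list:
    """Return a list of problems; an empty list means the row is acceptable."""
    errors = []
    key = (row.get("activity"), row.get("unit"))
    if key not in EMISSION_FACTORS_KG_PER_UNIT:
        errors.append(f"unknown activity/unit: {key}")
    qty = row.get("quantity")
    if isinstance(qty, bool) or not isinstance(qty, (int, float)) or qty < 0:
        errors.append("quantity must be a non-negative number")
    if not row.get("evidence_ref"):
        errors.append("evidence_ref is required for audit")
    return errors


def activity_emissions_kg(row: dict) -> float:
    """Emissions = activity quantity x emission factor, for validated rows only."""
    errors = validate_row(row)
    if errors:
        raise ValueError("; ".join(errors))
    return row["quantity"] * EMISSION_FACTORS_KG_PER_UNIT[(row["activity"], row["unit"])]
```

Rejecting a row at submission time, with a specific reason, is what replaces the cycle of emailing suppliers about a messy spreadsheet months later.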

Comparative Analysis: Which Role is Right for You?

| Career Path | Primary Technical Shift | The “Missing Link” Skill | Verdict |
| --- | --- | --- | --- |
| Agentic AI | Prompt Eng. → Orchestration | Stateful Cyclic Graphs | Best for Python devs who love logic. |
| AI Security | Scanning → Red Teaming | Indirect Prompt Injection | Best for security pros who like breaking things. |
| Cloud FinOps | Cost → Unit Economics | FOCUS 1.3 ETL | Best for data analysts who like money. |
| PQC Migration | Algorithms → Hybrid Mode | TLS Optimization | Best for deep backend/network engineers. |
| TinyML | Cloud → Edge NPU | Quantization-Aware Training | Best for embedded C++ engineers. |

12. Conclusion: The Path Forward

The careers defined here share a common thread: they occupy the “infrastructure layer.” They aren’t about the easy tasks AI can automate. They are about the hard problems of integration, security, and reality.

Key Takeaways:

- Embrace Complexity: Don’t shy away from “Hybrid Mode” encryption or “Cyclic Graph” architectures. That is where the value is.
- Master the Standards: Read the boring documents (FOCUS Spec, OWASP LLM Top 10). They define the rules of the game.
- Build Systems, Not Scripts: Move beyond isolated code. Build stateful systems that persist, recover, and integrate.

By targeting these specific “Missing Links,” you position yourself as one of the architects essential to the operation of the real world in 2026.
