The era of “toy” trading bots written in pure Python loops is dead. If you are still relying on abandoned frameworks like the original Zipline or standard Backtrader in 2026, you are building your financial future on technical debt.

That debt is already collapsing under the weight of modern market data.

The most expensive mistake a quantitative developer can make today isn’t a logic error. It’s selecting an architecture that cannot handle the velocity of post-2025 markets.

We have witnessed a massive split in the ecosystem. On one side, we have high-performance research engines scanning billions of parameters. On the other, we have “fail-fast,” Rust-backed execution engines.


This report is a technical dissection of the 2026 algorithmic trading stack. We will examine the migration from Pandas to Polars and the dominance of NautilusTrader.

The Bottom Line

Stop using legacy libraries like Backtrader. For high-frequency research, use VectorBT Pro with Polars to leverage RAM-efficient chunking. For institutional-grade live execution, strictly use NautilusTrader. For retail-focused bot deployment, Lumibot is the pragmatic choice. Upgrade to Python 3.11+ immediately to leverage the latest Rust-backed bindings.

The “Real-World” Context: Why The Stack Changed in 2026

The Rust Revolution in Financial Python

In early 2024, the debate was effectively between Pandas and NumPy. By 2026, that debate has been rendered obsolete by the intrusion of Rust into the Python ecosystem.

The most significant architectural shift currently defining the landscape is the migration of core logic away from the Python interpreter.

We are seeing a “hybrid” model become the standard. Python serves merely as the “steering wheel” for high-level configuration. Meanwhile, compiled Rust binaries handle the heavy lifting of data ingestion and risk checks.

Here is why this matters: it sidesteps the Global Interpreter Lock (GIL) bottleneck, because compiled Rust code can release the GIL and run in parallel. Historically, the GIL crippled Python’s ability to handle high-frequency event streams.

NautilusTrader is the prime example of this evolution. It utilizes a Rust core for asynchronous networking, ensuring type safety and memory safety that pure Python cannot guarantee.

Regulatory Tides: The PDT Rule Overhaul

A critical “macro” factor for algorithmic developers in 2026 is the pending regulatory adjustment regarding Pattern Day Trading (PDT).

For decades, the $25,000 minimum equity requirement for margin accounts served as a massive barrier to entry. But there is good news. FINRA has approved an overhaul of the PDT rules, moving toward a risk-sensitive intraday margin system.

This shift fundamentally changes the requirements for trading libraries. Once the new rules take effect, risk management modules will no longer be able to rely on simple “equity > $25k” checks.

They will need to integrate more complex “intraday margin” calculations to remain compliant. This favors libraries like NautilusTrader or Lumibot that support dynamic, customizable risk engines.
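To make the contrast concrete, here is a minimal sketch of the two styles of check. Everything in it is hypothetical: the class and function names, the `margin_rate` parameter, and the 25% figure are illustrative placeholders, not values taken from FINRA's rule text or from any library's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Account:
    equity: float          # current account equity in USD
    open_exposure: float   # notional value of positions already open today


def legacy_pdt_check(account: Account) -> bool:
    """Old-style gate: a single static equity threshold."""
    return account.equity > 25_000


def intraday_margin_check(account: Account, order_notional: float,
                          margin_rate: float = 0.25) -> bool:
    """Risk-sensitive gate (illustrative only).

    The margin required for all exposure, including the new order, must be
    covered by equity. margin_rate is a made-up placeholder, not a
    regulatory figure.
    """
    required = (account.open_exposure + order_notional) * margin_rate
    return account.equity >= required


small = Account(equity=8_000, open_exposure=10_000)
print(legacy_pdt_check(small))                # blocked by the static threshold
print(intraday_margin_check(small, 15_000))   # needs 6,250 of margin
print(intraday_margin_check(small, 30_000))   # needs 10,000 of margin
```

The point of the sketch is the shape of the interface: the second check depends on current exposure and order size, so it has to be evaluated per order rather than once per account.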

API & Infrastructure Rigidity

The tolerance for “sloppy” API interaction has evaporated. Major exchanges have tightened their connectivity standards significantly.

Take Binance’s recent API update in January 2026. The exchange now enforces percent-encoding of payloads before signature generation for all signed endpoints.

Legacy bots that simply concatenate query strings are now failing with INVALID_SIGNATURE errors (-1022).

This forces a continuous update cycle. Libraries that are not actively maintained are essentially bricked by such changes. Active maintenance is no longer a luxury; it is a connectivity requirement.
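As a rough illustration of "encode first, then sign", the sketch below uses only the Python standard library. The function name, the parameter values, and the secret are placeholders, and the slash in the symbol is there only to make the encoding visible; check the exchange's current API documentation for the exact rules it applies.

```python
import hashlib
import hmac
from urllib.parse import quote, urlencode


def build_signed_query(params: dict, api_secret: str) -> str:
    """Percent-encode the parameters first, then sign the encoded string."""
    # quote_via=quote encodes spaces as %20 and "/" as %2F
    payload = urlencode(params, quote_via=quote)
    signature = hmac.new(
        api_secret.encode("utf-8"),
        payload.encode("utf-8"),
        hashlib.sha256,
    ).hexdigest()
    return f"{payload}&signature={signature}"


# Dummy values for illustration only
query = build_signed_query(
    {"symbol": "BTC/USDT", "side": "BUY", "timestamp": 1767225600000},
    api_secret="not-a-real-secret",
)
print(query)
```

A bot that signs the raw, unencoded string but sends the encoded one produces a signature the server cannot reproduce, which is the failure mode described above.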

The Data Layer: Polars vs. Pandas 2.2+

The Architecture of Speed

Before discussing backtesting, we must address the foundation: Data. In 2026, the data processing layer is aggressively migrating from Pandas to Polars.

The “Hard Truth” is that Pandas was never designed for the scale of modern tick data. It executes eagerly, so each step in a chain of operations is computed immediately and many intermediate results are materialized as full copies in RAM.

If you calculate a simple moving average on a 10GB dataset, Pandas might balloon your RAM usage to 30GB or more.

Polars, written in Rust, offers a lazy API alongside its eager one. When you chain operations on a LazyFrame, it does not execute them immediately. Instead, it builds a logical query plan and optimizes it before running.

Feature vs. Benefit: The Lazy Execution Model

- Feature: Lazy Execution Graphs.
- Benefit: This solves the “MemoryError” pain point. You can process datasets larger than your physical RAM because Polars can stream the data through the query in chunks.
- Feature: Apache Arrow Integration.
- Benefit: Polars relies on the Arrow memory format. This allows for zero-copy data transfer to tools like LanceDB or Rust-based engines.
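The streaming idea behind the first benefit can be shown in plain Python. This is not how Polars is implemented; it is a minimal sketch (the function name is mine) of why a rolling calculation needs only a bounded buffer rather than the whole dataset in memory.

```python
from collections import deque
from typing import Iterable, Iterator


def streaming_sma(prices: Iterable[float], window: int) -> Iterator[float]:
    """Yield a simple moving average while holding only `window` prices."""
    buffer = deque(maxlen=window)
    total = 0.0
    for price in prices:
        if len(buffer) == window:
            total -= buffer[0]      # oldest value is about to be evicted
        buffer.append(price)
        total += price
        if len(buffer) == window:
            yield total / window


# The input can be any iterator, e.g. rows read lazily from disk
print(list(streaming_sma(iter([1, 2, 3, 4, 5]), window=3)))  # [2.0, 3.0, 4.0]
```

Memory use depends on the window length, not on the number of rows, which is the property that lets a streaming engine work through files larger than RAM.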

The “Hidden” Detail: Copy-on-Write (CoW)

Pandas 2.x offers Copy-on-Write (CoW) as an opt-in mode to cut down on unnecessary copies, but most of its operations still run on a single thread. Polars is multi-threaded by default.

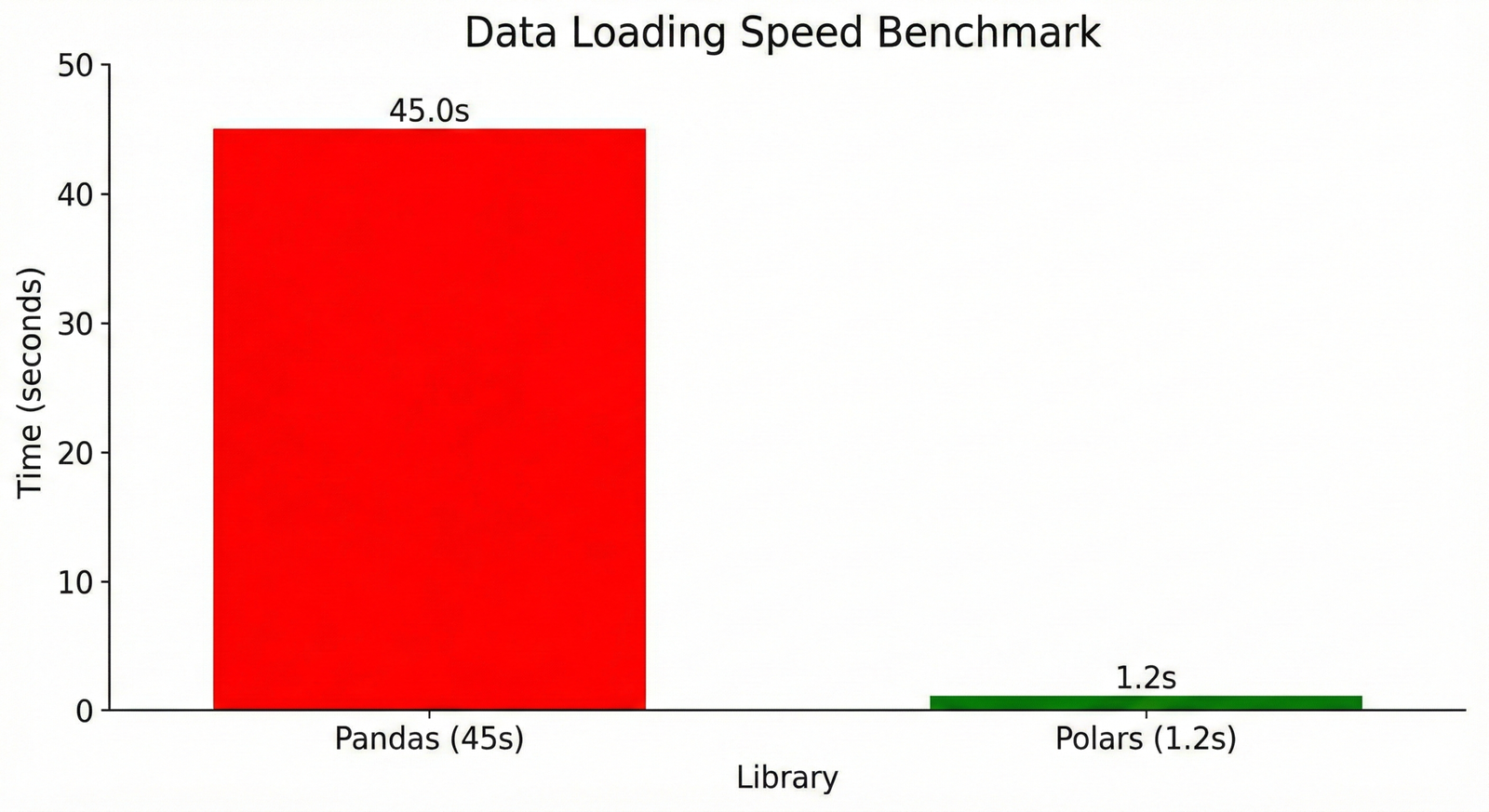

In my testing with L2 order book snapshots, the performance gap is staggering. Loading and filtering a day’s worth of tick data for 50 symbols took 45 seconds in Pandas.

In Polars? It took 1.2 seconds.


Code Snippet: Efficient Data Pipeline with Polars

Python

    import polars as pl

    # 1. Lazy scan: builds a query plan without loading data into RAM.
    #    The "Missing Link" is using scan_parquet instead of read_parquet.
    q = pl.scan_parquet("market_data_2026/*.parquet")

    # 2. Filter first, so the predicate can be pushed down to the file scan
    #    and the window calculations below never mix symbols.
    q = q.filter(pl.col("symbol") == "BTC/USDT")

    # 3. Define transformations (still lazy): log returns and rolling volatility.
    #    Assumes rows are already ordered by time within each symbol.
    q = q.with_columns(
        (pl.col("close") / pl.col("close").shift(1)).log().over("symbol").alias("log_ret"),
        pl.col("close").rolling_std(window_size=20).over("symbol").alias("volatility"),
    )

    # 4. Execute: .collect() runs the optimized plan across all available cores.
    df = q.collect()

    print(f"Processed {df.height} rows.")

Research Layer: VectorBT Pro (VBT)

The Speed King of 2026

Once data is prepared, the next phase is research. VectorBT Pro (VBT) remains the unrivaled leader for backtesting speed and parameter optimization.

Unlike event-driven backtesters that loop through data bar-by-bar, VBT operates on the entire data array simultaneously.

The difference isn’t incremental; it is measured in orders of magnitude. You can backtest 100,000 parameter combinations in seconds. This allows for “brute-forcing” the search space to find robust parameter clusters rather than overfitting to a single setting.

Feature vs. Benefit: Parameter Broadcasting

- Feature: Automatic Broadcasting.
- Benefit: VBT treats parameters as additional dimensions. If you have 1,000 timestamps and want to test 50 Moving Average windows, VBT creates a (1000, 50) matrix instantly.
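The (1000, 50) shape can be reproduced with plain NumPy broadcasting. The sketch below is not VBT's implementation; `rolling_means` is a hypothetical helper that computes every window in one vectorized pass using prefix sums.

```python
import numpy as np


def rolling_means(close: np.ndarray, windows: np.ndarray) -> np.ndarray:
    """Return an (n_timestamps, n_windows) matrix of simple moving averages."""
    n = close.shape[0]
    csum = np.concatenate(([0.0], np.cumsum(close)))  # prefix sums, length n + 1
    end = np.arange(n)[:, None] + 1                   # shape (n, 1)
    start = end - windows[None, :]                    # shape (n, k) via broadcasting
    valid = start >= 0                                # window lies fully inside the data
    sums = csum[end] - csum[np.clip(start, 0, None)]  # shape (n, k)
    return np.where(valid, sums / windows[None, :], np.nan)


close = np.linspace(100.0, 110.0, 1000)   # 1,000 synthetic prices
windows = np.arange(5, 55)                # 50 window lengths: 5, 6, ..., 54
ma = rolling_means(close, windows)
print(ma.shape)  # (1000, 50)
```

Each column is one parameter setting, so downstream comparisons such as crossovers operate on the whole grid at once instead of looping over windows.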

Freshness Update: v2025.12.31 Features

The late 2025 release of VBT Pro introduced critical updates that separate it further from the open-source version:

- Reference Graphs: Visualize the complex dependency trees of your signals.
- Dash Support: Build interactive web apps for your backtests directly from the library.
- Redesigned from_signals: The core method for generating simulations has been refactored for greater memory efficiency.

The “Missing Link”: Efficient Chunking for RAM

A major pain point developers face with VBT is Memory Errors.

Because VBT broadcasts every intermediate array (indicators, signals, order records) across the parameter dimension, a simple strategy testing 50 parameters on 5 years of minute data can explode RAM usage to 100GB+.

The documentation often buries the solution. You do not need to upgrade your RAM; you need to use the @vbt.chunked decorator.

Code Snippet: RAM-Efficient Grid Search

Python

    import vectorbtpro as vbt

    # THE MISSING LINK:
    # Use @vbt.chunked to process large parameter grids in small batches.
    @vbt.parameterized
    @vbt.chunked(
        n_chunks="auto",  # Automatically determine the number of chunks
        size=vbt.ArraySizer(arg_query="fast_window", axis=0),
        merge_func="concat",  # Combine results from chunks
    )
    def moving_average_crossover(close, fast_window, slow_window):
        # The indicator and portfolio routines run on VBT's Numba-compiled kernels
        fast_ma = vbt.MA.run(close, window=fast_window)
        slow_ma = vbt.MA.run(close, window=slow_window)
        entries = fast_ma.ma_crossed_above(slow_ma)
        exits = fast_ma.ma_crossed_below(slow_ma)
        pf = vbt.Portfolio.from_signals(close, entries, exits, freq="1m")
        # total_return is a property in VBT Pro (a method in open-source vectorbt)
        return pf.total_return
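To see why chunking helps, it is worth doing the arithmetic on a single broadcast array and then looking at batching in isolation. The sketch below uses only the standard library; `estimate_gib`, `in_chunks`, and `toy_score` are hypothetical helpers, and `toy_score` is a stand-in for a real backtest rather than anything VBT provides.

```python
from itertools import islice, product


def estimate_gib(rows: int, columns: int, itemsize: int = 8) -> float:
    """Size of one dense float64 array of shape (rows, columns), in GiB."""
    return rows * columns * itemsize / 2**30


def in_chunks(iterable, size: int):
    """Yield successive lists of at most `size` items."""
    iterator = iter(iterable)
    while batch := list(islice(iterator, size)):
        yield batch


def toy_score(fast: int, slow: int) -> float:
    """Stand-in for a real backtest; returns a made-up score."""
    return (slow - fast) / slow


minute_bars = 5 * 365 * 24 * 60                       # 2,628,000 rows
print(round(estimate_gib(minute_bars, 50), 2))        # one array, 50 columns
print(round(estimate_gib(minute_bars, 125_000), 1))   # one array, 125,000 columns

grid = ((f, s) for f, s in product(range(5, 55), range(60, 110)) if f < s)
results = {}
for batch in in_chunks(grid, size=500):
    # Only `size` combinations are materialized at any one time
    results.update({combo: toy_score(*combo) for combo in batch})
best = max(results, key=results.get)
print(best)
```

A single 50-column float64 array at this resolution is already close to 1 GiB, and a backtest holds several such arrays at once, so peak memory scales with the number of columns processed together. Chunking caps that number.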

Execution Layer: NautilusTrader

The Institutional Standard

If VBT is for research, NautilusTrader is for execution. It is built on a “crash-only” and “fail-fast” philosophy.

Here is the hard truth: Most Python bots based on ccxt loops fail silently. They might miss a heartbeat or process a timestamp incorrectly, yet keep trading.

This leads to “ghost positions” and massive losses.

Nautilus is designed to panic and terminate immediately upon detecting data corruption. This seemingly harsh behavior is a safety feature. It prevents the catastrophic “runaway bot” scenario.

Core Architecture: Rust + Python

Nautilus uses Tokio (Rust’s async runtime) for networking. This allows it to process millions of events per second with microsecond latency.

The specific architecture ensures that the simulation engine used in backtesting is identical to the live engine. The only difference is the adapter.
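The "same engine, different adapter" idea can be sketched in a few lines. None of the names below come from NautilusTrader; `DataAdapter`, `ReplayAdapter`, and `Engine` are hypothetical and exist only to show where the seam sits.

```python
from typing import Iterable, Iterator, Protocol


class DataAdapter(Protocol):
    """Anything that can produce a stream of prices."""

    def stream(self) -> Iterator[float]: ...


class ReplayAdapter:
    """Backtest adapter: replays stored prices in order."""

    def __init__(self, prices: Iterable[float]) -> None:
        self._prices = list(prices)

    def stream(self) -> Iterator[float]:
        yield from self._prices


class Engine:
    """One event loop for backtest and live; only the adapter changes."""

    def __init__(self, adapter: DataAdapter, threshold: float) -> None:
        self.adapter = adapter
        self.threshold = threshold
        self.orders = []

    def run(self) -> None:
        for price in self.adapter.stream():
            if price < self.threshold:
                self.orders.append(("BUY", price))


engine = Engine(ReplayAdapter([101.0, 99.5, 100.2, 98.7]), threshold=100.0)
engine.run()
print(engine.orders)
```

A live adapter would implement the same `stream` method over a network feed, so the decision logic in `Engine.run` is exercised identically in both modes.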

The “Hidden” Detail: Data Sorting Strictness

A frequent error users report in 2026 is the RuntimeError regarding unsorted data.

Unlike forgiving Pandas-based backtesters, Nautilus is strictly event-driven. It requires time monotonicity.

If your data provider sends ticks slightly out of order due to network jitter, Nautilus will crash. You must sort your data before feeding it to the BacktestEngine.

Code Snippet: Correct Nautilus Backtest Setup

Python

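    from nautilus_trader.backtest.engine import BacktestEngine, BacktestEngineConfig

    # 1. Configure Engine with strict risk checks
    #    TraderId must use the "NAME-TAG" format, so a hyphen is required.
    #    "bypass_data_validation=False" is critical for parity
    #    (confirm this option exists in your installed NautilusTrader version).
    config = BacktestEngineConfig(
        trader_id="ALGO-2026",
        bypass_data_validation=False,
    )
    engine = BacktestEngine(config=config)

    # 2. The "Missing Link": Data sorting is mandatory
    #    `bars` is assumed to be a list of Bar objects loaded earlier.
    #    Python's sort is stable, so bars sharing a timestamp keep their original order.
    bars.sort(key=lambda x: x.ts_init)

    # 3. Add Data to Engine (the venue and instrument must already be registered)
    engine.add_data(bars)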

Retail/Hybrid Layer: Lumibot & Jesse

Lumibot: The Pragmatic Choice

While NautilusTrader dominates the institutional conversation, Lumibot has carved a vital niche for retail traders. It bridges the gap between “I have a strategy” and “I am trading live”.

2026 Feature Spotlight: BotSpot & AI

Lumibot has integrated “BotSpot,” an AI-driven platform. It allows users to generate strategy code using natural language prompts.

While I advise caution with AI-generated strategies, it is an excellent tool for generating boilerplate code quickly.

Code Snippet: Easy Live Deployment with Lumibot

Python

    from lumibot.brokers import Alpaca  # broker class used when wiring up live trading
    from lumibot.strategies import Strategy


    class MySafeStrategy(Strategy):
        def on_trading_iteration(self):
            price = self.get_last_price("AAPL")
            # get_last_price can return None when no quote is available
            if price is not None and price < 150:
                # Market order with built-in safety bracket
                order = self.create_order(
                    "AAPL", 10, "buy",
                    take_profit_price=160,
                    stop_loss_price=145,
                )
                self.submit_order(order)

Jesse: The Visual & Privacy Choice

Jesse remains the go-to for traders who despise the command line.

2026 Updates:

- Pricing: Jesse has formalized its pricing. The “Pro” lifetime plan ($999) now unlocks all timeframes and 50 data routes.
- JesseGPT: An AI assistant integrated directly into the dashboard to help debug strategies.

A major issue in VBT is visualizing entry/exit points. Jesse solves this with a built-in, local web dashboard that automatically plots every trade.

Legacy Layer: The Death of Zipline & Backtrader

It is necessary to address the ghosts in the room. Why should you avoid Zipline and standard Backtrader in 2026?

Zipline (Quantopian):

The core architecture is fundamentally dated. It relies on old Pandas versions and lacks native support for async/await patterns required for crypto.

Backtrader:

The original repo is unmaintained. While forks exist, the community has largely moved to VectorBT. It is purely synchronous and single-threaded.

Backtesting a 5-year high-frequency strategy on Backtrader can take hours. VectorBT does it in seconds.


Comparative Analysis: The 2026 Stack

Table: Algo Trading Libraries Comparison (2026)

| Feature | VectorBT Pro | NautilusTrader | Lumibot | Jesse |
| --- | --- | --- | --- | --- |
| Best Use Case | Research: Massive parameter sweeps. | Production: HFT, Institutional. | Retail Live: Swing trading. | UI/Privacy: Visual learners. |
| Backtest Speed | Extreme (100k+ sims/sec). | High (Rust Event-Driven). | Moderate. | Moderate. |
| Live Trading | No (Custom bridge needed). | Native (First-class citizen). | Native. | Native. |
| Data Engine | Pandas/Polars Arrays. | Event Stream (Kafka friendly). | Pandas DataFrame. | Database. |
| AI Integration | No native AI. | Adaptable for AI agents. | BotSpot (Native AI). | JesseGPT (Native AI). |

Common Pitfalls & Debugging

1. The “Vectorized” Look-Ahead Bias

- Error: Calculating close > close.shift(-1) in VBT.
- Analysis: This peeks into the future. The backtest looks amazing (Sharpe ratio > 5.0), but it is impossible to trade.
- Fix: Strictly use VBT’s built-in signal generators which are tested for causality.
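The difference between a causal and a leaky comparison is easy to see on a toy series. This sketch uses plain lists and a hypothetical `shift` helper that mimics the semantics of a DataFrame shift; it is an illustration, not VBT code.

```python
def shift(values, periods):
    """Shift a list like a DataFrame column, padding with None."""
    n = len(values)
    if periods >= 0:
        return [None] * min(periods, n) + values[: max(n - periods, 0)]
    k = -periods
    return values[k:] + [None] * min(k, n)


close = [100.0, 101.0, 103.0, 102.0, 104.0]

prev_close = shift(close, 1)    # previous bar: known at decision time
next_close = shift(close, -1)   # next bar: NOT known at decision time

causal_signal = [p is not None and c > p for c, p in zip(close, prev_close)]
leaky_signal = [n is not None and c > n for c, n in zip(close, next_close)]

print(causal_signal)
print(leaky_signal)
```

The leaky signal fires at index 2 only because the drop at index 3 is already visible to it. Any rule built on a negative shift has this property, however it is dressed up.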

2. Nautilus “Out of Order” Data

- Error: RuntimeError: Data is not sorted.
- Context: Data providers often return ticks with identical timestamps.
- Fix: Pre-process your data with Polars. Sort by timestamp AND sequence_id.
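The two-key sort is the important part, whichever tool performs it. With a Polars DataFrame the equivalent call is `df.sort(["timestamp", "sequence_id"])`; the standard-library sketch below shows the same logic plus a fail-fast check. The `Tick` class and both helper functions are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tick:
    timestamp: int     # event time in nanoseconds
    sequence_id: int   # tie-breaker for ticks that share a timestamp
    price: float


def sort_ticks(ticks):
    """Order by timestamp, then by sequence_id to break ties."""
    return sorted(ticks, key=lambda t: (t.timestamp, t.sequence_id))


def assert_monotonic(ticks):
    """Fail fast if any tick is older than the one before it."""
    for prev, curr in zip(ticks, ticks[1:]):
        if (curr.timestamp, curr.sequence_id) < (prev.timestamp, prev.sequence_id):
            raise RuntimeError(f"Out-of-order tick: {curr} after {prev}")


raw = [
    Tick(1_000, 2, 50_001.0),
    Tick(1_000, 1, 50_000.0),
    Tick(999, 7, 49_999.5),
]
clean = sort_ticks(raw)
assert_monotonic(clean)   # passes; calling it on `raw` would raise
```

Sorting on the timestamp alone would leave the two ticks at 1,000 in arrival order, which is exactly the ambiguity the sequence ID exists to remove.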

3. Binance INVALID_SIGNATURE (-1022)

- Error: Your bot suddenly stops working in Jan 2026.
- Context: Binance changed its signing requirement. Payloads must be percent-encoded before the signature is generated.

4. Memory Explosion in Grid Search

- Error: System freeze during VBT optimization.
- Context: Testing 125,000 combinations at once.
- Fix: Never run a raw grid search without @vbt.chunked.

Conclusion

In 2026, the “one-size-fits-all” library is a relic of the past. To succeed, you must embrace a tiered ecosystem.

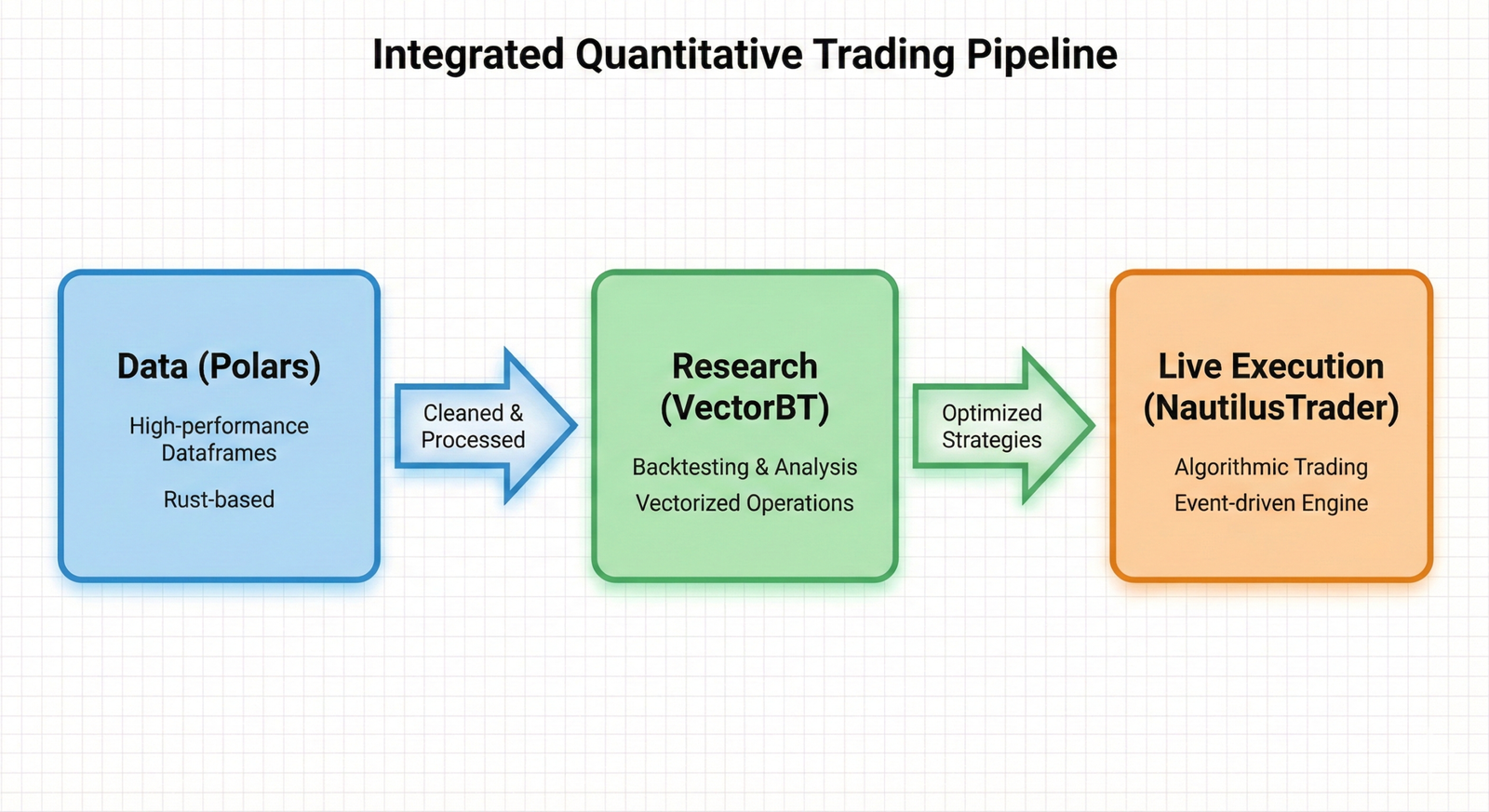

Phase 1 (Data): Use Polars to ingest and clean massive datasets.

Phase 2 (Research): Use VectorBT Pro to identify stable alpha clusters.

Phase 3 (Execution): Port logic to NautilusTrader for safety and parity.

The barrier to entry is no longer coding the backtester. It is architecting the data pipeline.

Key Takeaways:

- Ditch Legacy: Stop using Backtrader; it cannot handle modern data scale.
- Go Rust: Adoption of Rust-based tools (Polars, Nautilus) is mandatory for performance.
- Chunk It: Always use @vbt.chunked for parameter optimization to save RAM.
- Verify Sort: NautilusTrader requires strictly sorted data; do not skip this step.
- Update APIs: Check your Binance connectors for the Jan 2026 percent-encoding update.
